Is CloudFlare Always Online a leech?

As a Tor (The Onion Router) user, I already have a negative impression of CloudFlare because of their CAPTCHA trolling. So it gives me no joy to see CloudFlare Always Online circumventing my hotlink protection in order to “cache” my content on their service.

What is CloudFlare Always Online?

According to CloudFlare: “With Always Online, when your server goes down, CloudFlare will serve pages from our cache, so your visitors still see some of the pages they are trying to visit.”

Sounds great, or what? CloudFlare’s definition of a downed server, however, implies that the “downed” server still returns an HTTP 502 or 504 response code (always read the fine print, huh).

What is the problem with CloudFlare Always Online?

CloudFlare powers a lot of scam sites that don’t produce their own content but instead take what they need from other sites. When a scam site uses my images to promote their crap, they link directly to images hosted on my server and steal my bandwidth. To battle this, webmasters implement hotlink protection, which blocks requests that don’t originate from a predetermined list of domains. The HTTP header field used to determine where a request came from is called the referer (sent as the Referer header).
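For reference, a bare-bones hotlink protection ruleset might look something like the sketch below. It is not my exact config: it assumes Apache with mod_rewrite enabled, example.com is a placeholder for whatever domains you allow, and the list of image extensions is only an illustration.

```apache
<IfModule mod_rewrite.c>
    RewriteEngine On
    # Let requests without a Referer header through (direct visits, privacy tools).
    RewriteCond %{HTTP_REFERER} !^$
    # example.com is a placeholder: list the domains allowed to embed your images.
    RewriteCond %{HTTP_REFERER} !^https?://(www\.)?example\.com(/|$) [NC]
    # Any other referer asking for an image gets 403 Forbidden.
    RewriteRule \.(png|jpe?g|gif)$ - [F,NC]
</IfModule>
```

Both conditions must hold before the rule fires, so a request is only rejected when it carries a referer and that referer is not on the list. A request with no referer at all passes untouched.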

However, CloudFlare is aware that hotlink protection interferes with their service. Their solution: if a server returns a 403 Forbidden response, simply drop the HTTP referer on the next request. Since blocking empty referers is a very bad idea (plenty of legitimate visitors send none, for example when a browser or privacy tool strips the header), CloudFlare walks away with the prize.

| Requested file | Status | Referer | User-agent |
|---|---|---|---|
| /bioshock.png | 403 | “http://scam.tld” | “CloudFlare-AlwaysOnline” |
| /bioshock.png | 200 | “-” | “CloudFlare-AlwaysOnline” |

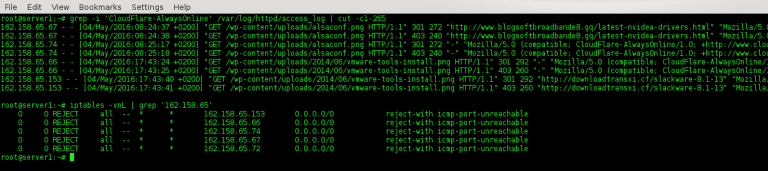

CloudFlare AlwaysOnline blocked and fed to the firewall after a user agent block.

On the Always Online sales pitch page, one feature stands out:

“Are you here because someone hotlinked to your site? CloudFlare also can help prevent hotlinking with ScrapeShield.”

So alright I should pay CloudFlare to block CloudFlare, that’s awesome :D

Since Always Online ignores robots.txt, the only viable solution is to block their user agent, which identifies itself as “CloudFlare-AlwaysOnline/1.0”. Below is the ruleset I’m currently using to block unwanted user agents. The list contains a mix of SEO services and misbehaving bots.

```apache
<IfModule mod_rewrite.c>
    RewriteEngine On
    # Match any of these bot names anywhere in the User-Agent header, ignoring case.
    RewriteCond %{HTTP_USER_AGENT} (blexbot|mj12bot|masscan|photon|semrushbot|ahrefsbot|orangebot|moreover|exabot|zeefscraper|smtbot|yacybot|xovibot|cloudflare-alwaysonline|haosouspider|kraken|steeler|cliqzbot|linkdexbot|megaindex|sogou|yeti|siteLockspider|telesphoreo) [NC]
    # Answer every matching request with 403 Forbidden.
    RewriteRule ^ - [F]
</IfModule>
```