How to configure Hugo as a Tor hidden service

After migrating my blog from WordPress to Hugo, I wanted to find a simple solution that allowed me to mirror my blog content effortlessly to my hidden services. As Hugo is a static content generator, I didn’t have the opportunity to dynamically rewrite content on the fly by pulling the HTTP host from the current request.

The interesting part of the challenge was to find a solution that would let me point three virtual sites to the same web root, thus replicating my original WordPress configuration. Likewise, I only want to maintain a single local Hugo installation.

Prerequisites

Tor should already be installed and running with hidden services configured. Otherwise, visit the Tor Project and read up on their documentation.

Replicating local and remote directory structure

I’m using Nginx server blocks (virtual hosts) to serve my content. The root directory for my blog was previously /var/www/blog.paranoidpenguin.net/html/. To allow serving content for additional websites (onion services) under the same (web) root, I’ll add an additional level of subdirectories below the html folder.

- The folder blog will contain the content for my clearnet site (blog.paranoidpenguin.net)

- The folder onionv2 will contain the content for my v2 onion address (slackiuxopmaoigo.onion)

- The folder onionv3 will contain the content for my v3 onion address (4hpfzoj3tgyp2w7sbe3gnmphqiqpxwwyijyvotamrvojl7pkra7z7byd.onion)

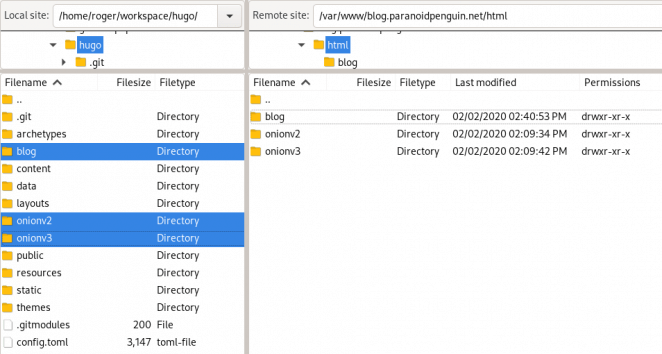

I’ll use an identical directory structure from within my local Hugo installation when building each website using Hugo’s --destination flag. That should make it easy to remember what goes where. That leaves me with the following local and remote directory structure:

A split view of my local Hugo installation and the web root for the remote server.
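As a rough sketch of the layout described above, the three subdirectories could be created like this. WEB_ROOT is an assumption for illustration: it defaults to a scratch directory so the snippet is safe to try, whereas on the server it would be the html folder named earlier.

```shell
#!/bin/sh
# WEB_ROOT is a placeholder (assumption). It defaults to a scratch directory;
# on the server it would be /var/www/blog.paranoidpenguin.net/html.
WEB_ROOT=${WEB_ROOT:-$(mktemp -d)}

# One subdirectory per site: clearnet, v2 onion and v3 onion.
for site in blog onionv2 onionv3; do
    mkdir -p "${WEB_ROOT}/${site}"
done

ls -1 "$WEB_ROOT"
```

Hugo and rsync will normally create the destination folder themselves, so this is mostly useful for checking permissions up front.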

Hugo flags and environment variables

In theory, Hugo provides us with the functionality we need to mirror content across multiple domains.

Flags:

- -b, --baseURL: hostname (and path) to the root, e.g. http://slackiuxopmaoigo.onion/

- -d, --destination: filesystem path to write files to, e.g. blog

Environment variables:

- HUGO_TITLE="Slackiuxopmaoigo.onion"
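To make the combination of flags and environment variable concrete, here is a minimal sketch that only prints the Hugo command for one onion mirror instead of running it. The helper name onion_build_cmd is hypothetical; the flags and the HUGO_TITLE variable are the ones listed above.

```shell
#!/bin/sh
# Hypothetical helper: print (do not run) the Hugo command for one mirror.
# Arguments: destination folder, base URL, site title.
onion_build_cmd() {
    dest=$1
    base_url=$2
    title=$3
    # ${base_url%/}/ guarantees exactly one trailing slash on the base URL.
    printf 'HUGO_TITLE="%s" hugo --destination="%s" --baseURL="%s"\n' \
        "$title" "$dest" "${base_url%/}/"
}

onion_build_cmd onionv2 http://slackiuxopmaoigo.onion Slackiuxopmaoigo.onion
```

The printed line is meant to be run from the root of the Hugo installation.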

However, as my website has been exported from WordPress, it does contain a multitude of absolute URL references as part of the content itself. That, unfortunately, won’t be solved by using the --baseURL flag during build time.

To remedy this issue, I’ll have to use the sed command to perform a recursive “search and replace” on selected file extensions. More on that later.
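The idea can be tried on a scratch file before touching a real build. The sketch below is not the exact command from my script: it uses "|" as the sed delimiter so that only the dots need escaping, and the scratch directory and sample HTML are made up for illustration. It assumes GNU sed (for -i and the case-insensitive I flag).

```shell
#!/bin/sh
# Scratch directory standing in for a freshly built onion site (assumption).
SCRATCH=$(mktemp -d)
BLOG_URI='https://blog.paranoidpenguin.net'
ONION_URI='http://slackiuxopmaoigo.onion'

# Sample page containing absolute clearnet URLs.
cat > "${SCRATCH}/index.html" <<EOF
<a href="${BLOG_URI}/about/">About</a>
<img src="${BLOG_URI}/wp-content/logo.png">
EOF

# With "|" as delimiter, only the dots in the pattern need escaping.
BLOG_PATTERN=$(printf '%s\n' "$BLOG_URI" | sed 's|\.|\\.|g')

# Recursive search and replace limited to selected file extensions.
find "$SCRATCH" \( -name '*.html' -o -name '*.xml' -o -name '*.css' \) \
    -exec sed -i "s|${BLOG_PATTERN}|${ONION_URI}|gI" {} +

cat "${SCRATCH}/index.html"
```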

Nginx server blocks

Let’s start with a bare-bones virtual host configuration for slackiuxopmaoigo.onion. The configuration includes a location block for the /wp-content folder. Wp-content is my Hugo static folder, and I don’t want to duplicate the same static content (multimedia) across all three websites. Therefore, all requests for content residing in /wp-content will be served from my blog folder.

    server {
        listen 80;
        server_name slackiuxopmaoigo.onion;
        root /var/www/blog.paranoidpenguin.net/html/onionv2;
        index index.html;

        location /wp-content {
            root /var/www/blog.paranoidpenguin.net/html/blog;
        }

        location / {
            try_files $uri $uri/ =404;
        }
    }

Make sure that the listen directive matches what you specified in your Tor configuration. For reference:

    # HiddenServicePort x y:z
    # Redirect requests on port x to the address y:z.
    HiddenServiceDir /var/lib/tor/hidden_service/
    HiddenServicePort 80 127.0.0.1:80
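A quick way to see which port and target Tor forwards to, so it can be compared with the Nginx listen directive, might look like the following. The helper name hidden_service_targets is hypothetical, and the sketch runs against a scratch copy of the excerpt above rather than a real torrc.

```shell
#!/bin/sh
# Hypothetical helper: list "port target" pairs for every active
# HiddenServicePort line in a torrc file, skipping commented-out lines.
hidden_service_targets() {
    awk '$1 == "HiddenServicePort" { print $2, $3 }' "$1"
}

# Self-contained demonstration on a scratch copy of the excerpt above.
SAMPLE_TORRC=$(mktemp)
cat > "$SAMPLE_TORRC" <<'EOF'
# HiddenServicePort x y:z
HiddenServiceDir /var/lib/tor/hidden_service/
HiddenServicePort 80 127.0.0.1:80
EOF

hidden_service_targets "$SAMPLE_TORRC"
# prints: 80 127.0.0.1:80
```

On a real system the argument would be the path to your own torrc, which is often /etc/tor/torrc but depends on the distribution.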

Rinse and repeat for additional domains and restart Nginx.
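Since the server blocks differ only in hostname and subdirectory, the repetition could be scripted. This is a sketch under assumptions, not part of my setup: the helper name render_onion_vhost is hypothetical, and it simply prints the same bare-bones block shown above for a given host and folder.

```shell
#!/bin/sh
# WEB_ROOT mirrors the layout described above; adjust it to your own setup.
WEB_ROOT=/var/www/blog.paranoidpenguin.net/html

# Hypothetical helper: print an Nginx server block for one onion hostname.
render_onion_vhost() {
    host=$1   # onion hostname
    dir=$2    # subdirectory below WEB_ROOT holding this site's build
    cat <<EOF
server {
    listen 80;
    server_name ${host};
    root ${WEB_ROOT}/${dir};
    index index.html;

    # Shared static content is served from the clearnet build.
    location /wp-content {
        root ${WEB_ROOT}/blog;
    }

    location / {
        try_files \$uri \$uri/ =404;
    }
}
EOF
}

render_onion_vhost 4hpfzoj3tgyp2w7sbe3gnmphqiqpxwwyijyvotamrvojl7pkra7z7byd.onion onionv3
```

The output can be redirected to wherever your Nginx installation keeps its virtual host files. Running nginx -t before restarting will catch syntax errors.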

The build and deploy script

I’ve made a simple bash script to build my clearnet and hidden services. It takes care of running the required Hugo commands and modifies the generated content if needed. Additionally, the script publishes the finalized websites to a remote server using rsync over SSH.

    #!/bin/bash
    # A Hugo build and deploy script supporting onion domains.
    # License: https://blog.paranoidpenguin.net/license/
    # Note: requires bash (function keyword, ${var//...} substitution) and GNU sed.

    GOHUGO=$(which hugo)
    SSH_SERVER=server4.paranoidpenguin.net
    SSH_USER=root
    REMOTE_DIR=/var/www/blog.paranoidpenguin.net/html
    REMOTE_USER=user
    REMOTE_GROUP=group
    CONTENT_DIR=${HOME}/workspace/hugo
    STATIC_DIR=wp-content
    BLOG_URI=https://blog.paranoidpenguin.net
    BLOG_DIR=blog
    ONION_V2_TITLE=Slackiuxopmaoigo.onion
    ONION_V2_URI=http://slackiuxopmaoigo.onion
    ONION_V2_DIR=onionv2
    ONION_V3_TITLE=Slackiuxopmaoigo.onion
    ONION_V3_URI=http://4hpfzoj3tgyp2w7sbe3gnmphqiqpxwwyijyvotamrvojl7pkra7z7byd.onion
    ONION_V3_DIR=onionv3

    # Delete a previous build of the given publish directory.
    function prepare ()
    {
        PUBLISH_DIR=$1
        if [ -d "${CONTENT_DIR}" ]
        then
            if [ -d "${CONTENT_DIR}/${PUBLISH_DIR}" ]
            then
                echo "Recursively deleting ${CONTENT_DIR}/${PUBLISH_DIR}"
                rm -Rf "${CONTENT_DIR}/${PUBLISH_DIR}"
            fi
        else
            echo "Missing content directory: ${CONTENT_DIR}" >&2
            exit 1
        fi
    }

    # Build and publish the clearnet site.
    function clearnet_builder ()
    {
        PUBLISH_DIR=$1
        prepare "$PUBLISH_DIR"
        echo "Building ${CONTENT_DIR}/${PUBLISH_DIR}"
        cd "$CONTENT_DIR" && \
        "$GOHUGO" --destination="${CONTENT_DIR}/${PUBLISH_DIR}"
        publish "$PUBLISH_DIR"
    }

    # Build an onion site, rewrite absolute clearnet URLs and publish it.
    function onion_builder ()
    {
        PUBLISH_DIR=$1
        ONION_URI=$2
        TITLE=$3
        prepare "$PUBLISH_DIR"
        # Escape dots and slashes so the URIs can be used in the sed expression.
        BLOG_URI_ESCAPED=${BLOG_URI//./\\.}
        BLOG_URI_ESCAPED=${BLOG_URI_ESCAPED//\//\\/}
        ONION_URI_ESCAPED=${ONION_URI//./\\.}
        ONION_URI_ESCAPED=${ONION_URI_ESCAPED//\//\\/}
        echo "Building ${CONTENT_DIR}/${PUBLISH_DIR}"
        cd "$CONTENT_DIR" && \
        HUGO_TITLE="${TITLE}" \
        "$GOHUGO" \
            --destination="${CONTENT_DIR}/${PUBLISH_DIR}" \
            --baseURL="${ONION_URI}/" \
            --disableKinds=sitemap,RSS
        # Static content is served from the clearnet folder, so drop the copy.
        echo "Recursively deleting static content from ${CONTENT_DIR}/${PUBLISH_DIR}/${STATIC_DIR}"
        rm -Rf "${CONTENT_DIR}/${PUBLISH_DIR}/${STATIC_DIR}"
        find "${CONTENT_DIR}/${PUBLISH_DIR}" \( -name "*.html" -o -name "*.xml" -o -name "*.css" \) -exec sed -i "s/${BLOG_URI_ESCAPED}/${ONION_URI_ESCAPED}/gI" {} \;
        publish "$PUBLISH_DIR"
    }

    # Sync a finished build to the remote web root using rsync over SSH.
    function publish ()
    {
        PUBLISH_DIR=$1
        echo "Syncing ${CONTENT_DIR}/${PUBLISH_DIR}/ ---> ${REMOTE_DIR}/${PUBLISH_DIR}"
        rsync -azzq --chown="${REMOTE_USER}:${REMOTE_GROUP}" "${CONTENT_DIR}/${PUBLISH_DIR}/" "${SSH_USER}@${SSH_SERVER}:${REMOTE_DIR}/${PUBLISH_DIR}"
        echo "Rsync has finished."
        sleep 3
        clear
    }

    clear
    clearnet_builder "$BLOG_DIR"
    onion_builder "$ONION_V2_DIR" "$ONION_V2_URI" "$ONION_V2_TITLE"
    onion_builder "$ONION_V3_DIR" "$ONION_V3_URI" "$ONION_V3_TITLE"

Obviously, a local and remote backup should be in place before running a script you downloaded from a stranger on the Internet ;-)
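In the same cautious spirit, the delete step is the part most worth guarding, since an empty argument would make rm -Rf target the whole Hugo directory. Below is a minimal sketch of a stricter variant. The name prepare_safe and its two-argument form are hypothetical and not part of my script, and the demonstration runs in a scratch directory.

```shell
#!/bin/sh
# Hypothetical stricter variant of the delete step.
# Arguments: content directory, publish directory (relative to it).
prepare_safe() {
    content_dir=$1
    publish_dir=$2
    # Refuse to continue when either argument is empty, so the rm below
    # can never expand to the Hugo root itself.
    if [ -z "$content_dir" ] || [ -z "$publish_dir" ]; then
        echo "prepare_safe: missing argument" >&2
        return 1
    fi
    target="${content_dir}/${publish_dir}"
    if [ -d "$target" ]; then
        echo "Recursively deleting ${target}"
        rm -Rf -- "$target"
    fi
}

# Demonstration in a scratch directory standing in for the Hugo root.
DEMO_ROOT=$(mktemp -d)
mkdir -p "${DEMO_ROOT}/onionv2"
prepare_safe "$DEMO_ROOT" onionv2
```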

A quick breakdown of the script

Prepare

Deletes the specified publish directory before building a new website.

Clearnet builder

Builds my clearnet website using the --destination flag.

Onion builder

This function builds the onion sites and performs a search and replace using sed to fix absolute URLs referencing my clearnet site.

In addition to the --destination flag, I’m also using the --baseURL flag to provide the correct hostname (and path) to the root. The --disableKinds flag removes content I don’t want to offer from my onion sites. The static folder is deleted from each onion build, since Nginx serves that content from the blog folder.

Before running the sed command, I’m escaping dots and slashes in the absolute URLs to make them work with sed. I was unable to make that happen with a one-liner in bash, but I guess it will do.

Publish

Establishes an SSH connection (using SSH keys) with the remote server. Rsync is used to publish content to the specified remote folder, with the --chown option setting the required user and group.
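For what it’s worth, the escaping of dots and slashes can be done in one step by handing the URL to sed itself. This is a sketch of an alternative, not what my script does, and the helper name escape_for_sed is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: escape dots and slashes so a URL can be used
# inside a sed expression that uses "/" as its delimiter.
escape_for_sed() {
    # "|" is the delimiter here, so "." and "/" can sit unescaped in the
    # bracket expression; "\\&" emits a backslash followed by the match.
    printf '%s\n' "$1" | sed 's|[./]|\\&|g'
}

escape_for_sed https://blog.paranoidpenguin.net
# prints: https:\/\/blog\.paranoidpenguin\.net
```

This only covers the characters that occur in these URLs. A URL containing "&" or a backslash would need further escaping on the replacement side.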

Addendum

The build scripts are available on GitHub from this repository.

I’m trying out syntax highlighting with Hugo, feed readers, beware!

Tested on Arch Linux with:

- Hugo Static Site Generator v0.64.0

- Rsync version 3.1.3